I was looking for good tutorials on tf.contrib.rnn.BasicRNNCell() and tf.contrib.rnn.BasicLSTMCell(). Older tutorials (even ones from a year ago) are often no longer useful, because the modules in tf.contrib change from release to release. (Right now I am using the 1.3 API.) Recent tutorials, on the other hand, look unnecessarily complicated. Then I realized I already had Aurélien Géron's book at hand, worth every dollar I paid for it. Here I offer an example that is as simple as possible, which served as my starting point for using RNNs with TensorFlow. (I used to train RNNs with Torch7, which has better documentation and more community tutorials. I'm glad it has now been ported to Python as well.)

Objective:

Build a net that repeats the input from the previous time step. In other words, a net with a one-step memory.

Example:

| time   | 0   | 1   | 2   | 3   | 4   |
|--------|-----|-----|-----|-----|-----|
| input  | 0.1 | 0.5 | 0.3 | 0.4 | 0.1 |
| output | ?.? | 0.1 | 0.5 | 0.3 | 0.4 |

(?.? = any number)
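The target sequence is just the input shifted right by one time step. Here is a minimal NumPy sketch using the example numbers above. Note that `np.roll` wraps around, so the ?.? slot at time 0 receives the last input value; from the net's point of view that value is unpredictable, which is why it can be any number.

```python
import numpy as np

# Input sequence from the example, shaped [batch_size, sequence_size, input_size]
x = np.array([0.1, 0.5, 0.3, 0.4, 0.1]).reshape(1, 5, 1)

# Shift right by one time step along the time axis (axis=1).
# np.roll wraps around: the value at t=0 becomes the old last element.
y = np.roll(x, 1, axis=1)

print(y.ravel())  # [0.1 0.1 0.5 0.3 0.4]
```

The training loop further down builds its targets in exactly this way.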

Code:

```python
import tensorflow as tf  # written against the TensorFlow 1.3 API
import numpy as np
import matplotlib.pyplot as plt

n_inputs = 1     # number of neurons in the input layer
n_neurons = 128  # number of neurons in the RNN layer
n_seq = 100      # length of training sequences; also the back-propagation truncation length

# Input layer: shape = [batch_size, sequence_size, input_size]
X = tf.placeholder(tf.float32, shape=[None, n_seq, n_inputs])
```

```python
# RNN cell type. This is not a layer; it is a "layer generator" that
# dynamic_rnn unrolls over time. You can swap BasicRNNCell for
# BasicLSTMCell or other cell types.
cell = tf.contrib.rnn.BasicRNNCell(n_neurons)

# Generate the actual net.
# outputs.shape = [batch_size, sequence_size, n_neurons]
# states: final state, not used here
outputs, states = tf.nn.dynamic_rnn(cell, X, dtype=tf.float32)
```
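What `dynamic_rnn` does with a `BasicRNNCell` can be sketched in plain NumPy. This is an illustration, not TensorFlow's code: it assumes the cell's documented update, output = new_state = tanh(W * input + U * state + B), with a zero initial state. The names `basic_rnn_unroll`, `W_x`, `W_h` and `b` are hypothetical. The sketch shows why `outputs` has shape [batch_size, sequence_size, n_neurons], and that for this cell the final state is simply the last output.

```python
import numpy as np

def basic_rnn_unroll(X, W_x, W_h, b):
    """Unroll a vanilla tanh RNN over time (hypothetical helper).

    X: [batch_size, n_seq, n_inputs]. Returns (outputs, final_state) with
    outputs: [batch_size, n_seq, n_neurons], final_state: [batch_size, n_neurons].
    """
    batch_size, n_seq, _ = X.shape
    n_neurons = W_h.shape[0]
    h = np.zeros((batch_size, n_neurons))  # zero initial state
    outputs = np.empty((batch_size, n_seq, n_neurons))
    for t in range(n_seq):
        # The same weights are reused at every time step.
        h = np.tanh(X[:, t, :] @ W_x + h @ W_h + b)
        outputs[:, t, :] = h  # for a basic RNN cell, output == new state
    return outputs, h

rng = np.random.default_rng(0)
n_inputs, n_neurons, n_seq = 1, 128, 100
X = rng.random((2, n_seq, n_inputs))
W_x = rng.normal(scale=0.1, size=(n_inputs, n_neurons))
W_h = rng.normal(scale=0.1, size=(n_neurons, n_neurons))
b = np.zeros(n_neurons)

outputs, state = basic_rnn_unroll(X, W_x, W_h, b)
print(outputs.shape)  # (2, 100, 128)
```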

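```python
# Connect the RNN layer to the output layer. The reshapes are needed because
# the same dense weights are shared across all time steps: flatten to
# [batch_size * n_seq, n_neurons], apply the dense layer, then restore
# the shape [batch_size, n_seq, n_inputs].
y = tf.reshape(
    tf.layers.dense(tf.reshape(outputs, [-1, n_neurons]), n_inputs),
    [-1, n_seq, n_inputs])
```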
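The reshape trick flattens the batch and time axes into one, so that a single dense layer (one weight matrix and one bias) is applied identically at every time step. Below is a NumPy sketch of that equivalence. The weights `W` and `b` are hypothetical stand-ins for the kernel and bias that `tf.layers.dense` creates.

```python
import numpy as np

rng = np.random.default_rng(1)
batch_size, n_seq, n_neurons, n_inputs = 3, 100, 128, 1
outputs = rng.normal(size=(batch_size, n_seq, n_neurons))  # stand-in for RNN outputs
W = rng.normal(size=(n_neurons, n_inputs))  # hypothetical dense kernel
b = rng.normal(size=(n_inputs,))            # hypothetical dense bias

# Reshape route, as in the TensorFlow code: [B*T, N] -> dense -> [B, T, 1]
y_reshape = (outputs.reshape(-1, n_neurons) @ W + b).reshape(-1, n_seq, n_inputs)

# Explicit route: apply the same W and b separately at each time step t
y_loop = np.stack([outputs[:, t, :] @ W + b for t in range(n_seq)], axis=1)

print(np.allclose(y_reshape, y_loop))  # True
```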

```python
# Prepare for training.
y_target = tf.placeholder(tf.float32, shape=[None, n_seq, n_inputs])
loss = tf.reduce_mean(tf.square(y - y_target), axis=[1, 2])  # shape = [batch_size]
optimizer = tf.train.AdamOptimizer(learning_rate=0.00001)
train_op = optimizer.minimize(loss)
```

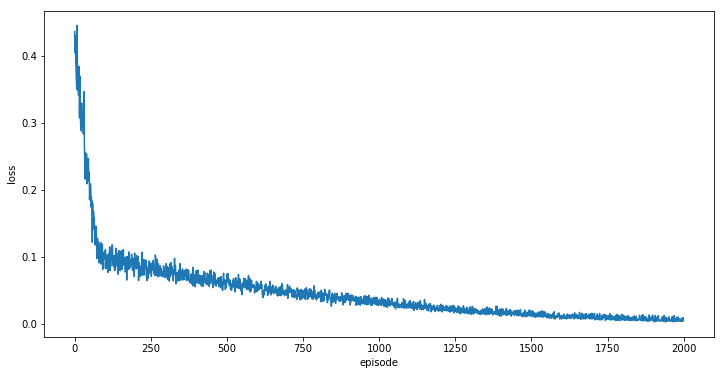
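Averaging over axes 1 and 2 gives one mean squared error per sequence, so `loss` has shape [batch_size]. As far as I know, when `minimize` receives a non-scalar loss, the gradients are those of the sum of its elements, which with a batch size of 1 makes no difference. The NumPy sketch below mirrors that loss with a hypothetical `per_sequence_mse` helper. It also shows that even a perfect causal repeater cannot reach zero, because the wrapped-around target at t = 0 is the last input, which the net has not seen yet.

```python
import numpy as np

def per_sequence_mse(y, y_target):
    """NumPy analogue of tf.reduce_mean(tf.square(y - y_target), axis=[1, 2])."""
    return np.mean(np.square(y - y_target), axis=(1, 2))

rng = np.random.default_rng(2)
n_seq, n_inputs = 100, 1
X_batch = rng.random((4, n_seq, n_inputs))
y_batch = np.roll(X_batch, 1, axis=1)  # right-shift, as in the training loop

# A hypothetical "perfect" causal repeater: outputs x[t-1] for t >= 1 and 0 at t = 0
y_perfect = np.concatenate([np.zeros((4, 1, n_inputs)), X_batch[:, :-1, :]], axis=1)

seq_loss = per_sequence_mse(y_perfect, y_batch)
print(seq_loss.shape)  # (4,)
# Only t = 0 contributes: there the target is the last input of the sequence.
print(np.allclose(seq_loss, X_batch[:, -1, 0] ** 2 / n_seq))  # True
```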

```python
# Train for 2000 episodes, each on a randomly generated sequence.
init = tf.global_variables_initializer()
saver = tf.train.Saver()
loss_save = []

with tf.Session() as sess:
    init.run()
    for i in range(2000):
        X_batch = np.random.rand(1, n_seq, n_inputs)
        y_batch = np.roll(X_batch, 1, axis=1)  # right-shift by one time step
        loss_val, _ = sess.run([loss, train_op],
                               feed_dict={X: X_batch, y_target: y_batch})
        loss_save.append(loss_val[0])
    saver.save(sess, './tmp.ckpt')
```

```python
plt.figure(figsize=[12, 6])
plt.plot(loss_save)
plt.xlabel('episode')
plt.ylabel('loss')
plt.show()
```

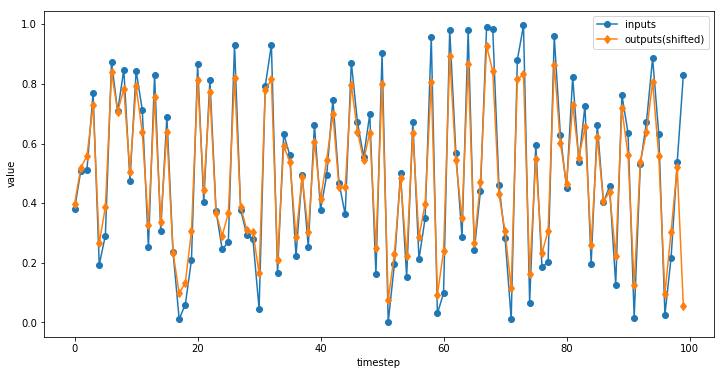
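With a batch size of 1, each episode's loss is noisy, so the curve can be hard to read. One option (my addition, not part of the original code) is to overlay a moving average. The `moving_average` helper and the window size of 50 are arbitrary, hypothetical choices.

```python
import numpy as np

def moving_average(values, window=50):
    """Moving average over a sliding window; returns len(values) - window + 1 points."""
    values = np.asarray(values, dtype=float)
    kernel = np.ones(window) / window
    return np.convolve(values, kernel, mode="valid")

# With the recorded losses, aligning each average with the last episode in its window:
#   plt.plot(np.arange(49, len(loss_save)), moving_average(loss_save, 50))
demo = moving_average([4.0, 2.0, 6.0, 0.0], window=2)
print(demo)  # [3. 4. 3.]
```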

Now I can visualize the results on another randomly generated input sequence, X_batch. The outputs are shifted left by one time step, so they should overlap with the inputs.

```python
with tf.Session() as sess:
    saver.restore(sess, './tmp.ckpt')
    X_batch = np.random.rand(1, n_seq, n_inputs)
    y_val = y.eval(feed_dict={X: X_batch})

plt.figure(figsize=[12, 6])
plt.plot(X_batch.ravel(), '-o')
plt.plot(np.roll(y_val.ravel(), -1), '-d')  # shift outputs left by one step to align with inputs
plt.legend(['inputs', 'outputs (shifted)'])
plt.xlabel('timestep')
plt.ylabel('value')
plt.show()
```

Ignore the last time step: after the left shift it holds the output at time 0, which has no previous input to repeat and so cannot be determined. Overall, the net did a good job repeating the number from the previous time step. This piece of code can also serve as a test case for other RNN implementations.
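To put a number on "a good job" instead of judging by eye, one could compare the shifted outputs with the inputs while dropping the undeterminable last step. A minimal sketch: `repeat_error` is a hypothetical helper, and the arrays are assumed to have the [1, n_seq, 1] shape of `X_batch` and `y_val` above.

```python
import numpy as np

def repeat_error(x, y):
    """Mean absolute error between input x[t] and output y[t+1], for t = 0..n_seq-2.

    Equivalent to comparing x with np.roll(y, -1) while ignoring the last time step.
    """
    x, y = np.ravel(x), np.ravel(y)
    return np.mean(np.abs(x[:-1] - y[1:]))

rng = np.random.default_rng(3)
x = rng.random((1, 100, 1))
y_ideal = np.roll(x, 1, axis=1)  # an ideal one-step repeater (value at t = 0 is arbitrary)
print(repeat_error(x, y_ideal))  # 0.0
```

With the trained net, `repeat_error(X_batch, y_val)` gives a single figure that can be compared across cell types, for example after swapping in `BasicLSTMCell`.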

Download and try it!